Hello, and welcome to my personal hell blog!

I will be covering an assortment of topics, tips & tricks, a few gotchas, and downright randomness in this blog. Sit tight, grab a cup of coffee [or tea], and prepare for some of the most disorganized collection of information, hopefully laced with a dash of "a-ha! so that's what that means..." every now and then

I'm X0RW3LL. I'd like to think I have absolutely no idea what I'm doing, but that's okay; no one really does. However, every now and then, every last one of us faces a point when they have to make a choice whether or not they'd like to keep things the way they are. I'm very curious by nature, and that's what I use to fuel this hunger for knowledge

In making this blog, I hope I can inspire anyone reading it to push for more understanding of things. This is not a guide by any means, nor should you treat it as such; it's simply my way of going about problem solving (where research topics are concerned), or simply an attempt at immortalizing a snapshot of this meat suit's stream of thoughts. Who knows; maybe someday, a highly advanced intelligence will come across this and look a little something like this

That being said, let's dive right into it. Check out the topics that might interest you most using the chapter outline in the collapsible left-side table of contents. This website is powered by mdbook, so feel free to use whichever dark/light mode theme you prefer

Changelog

2025-02-21

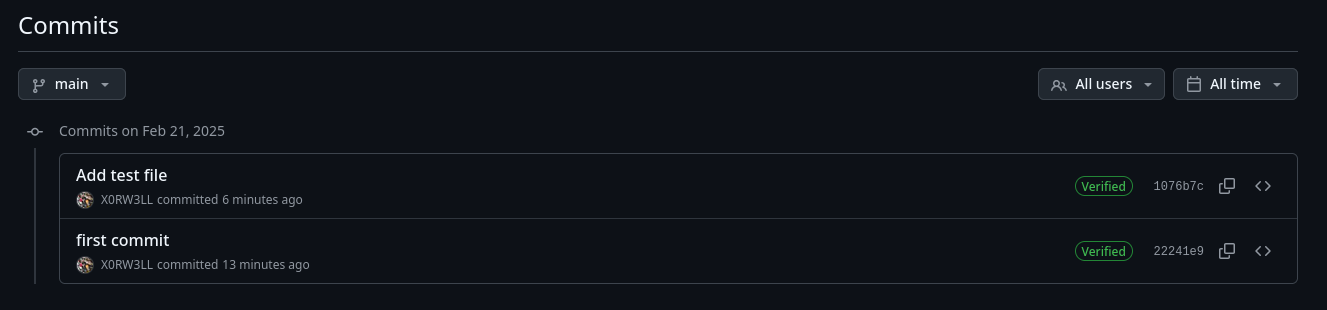

- Git: added a new entry for Git

2025-02-13

- Python: added a new entry for Python

- Removed {misc,thoughts}

- Unreferenced dead links

- Reordered chapter index

2024-12-26

- apt-patterns: added apt-patterns demo

2024-09-14

- Exhibit D: Look how they massacred my perms: added permissions demo

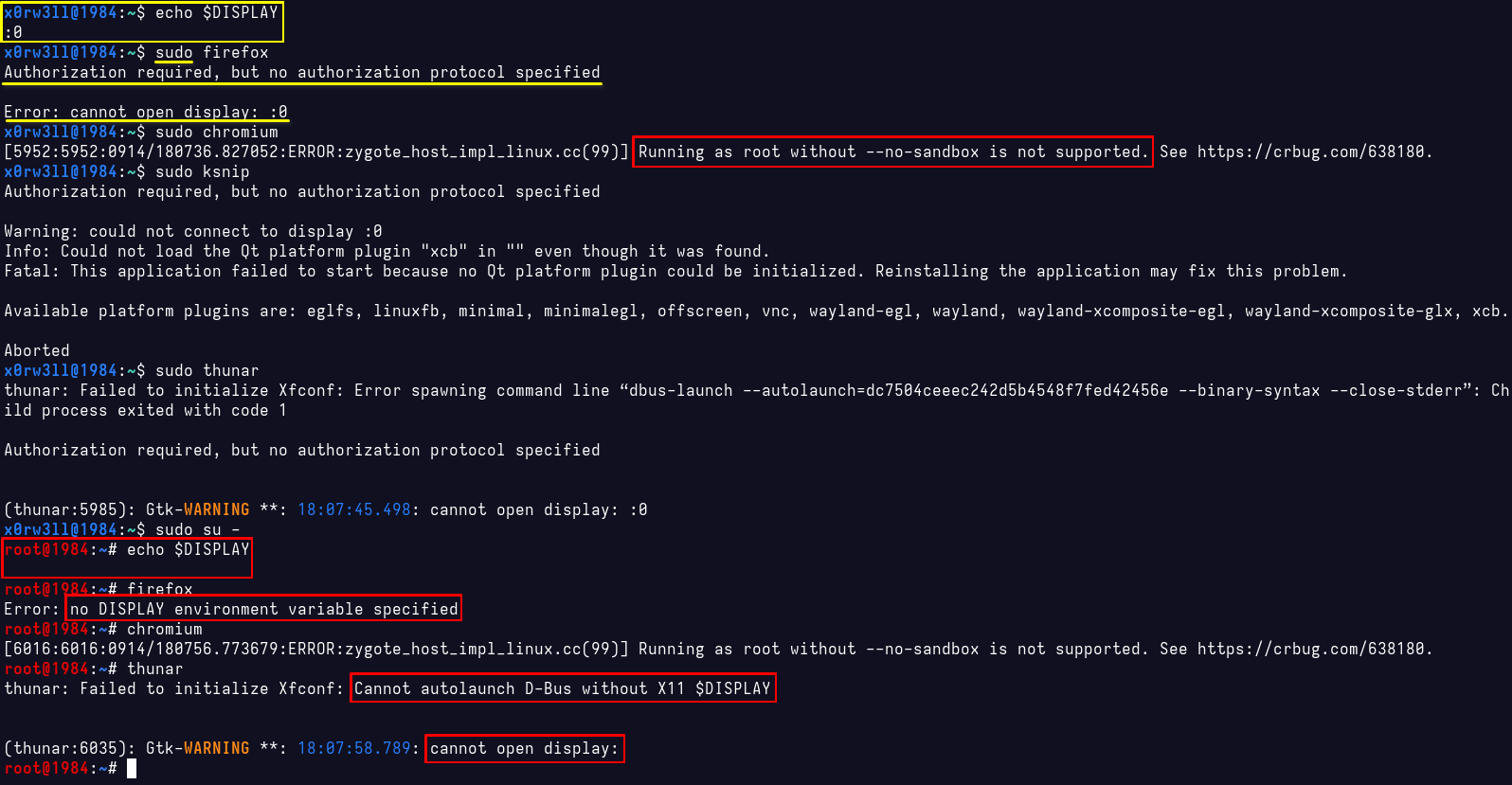

- Exhibit E: Can't see me: added broken DISPLAY demo

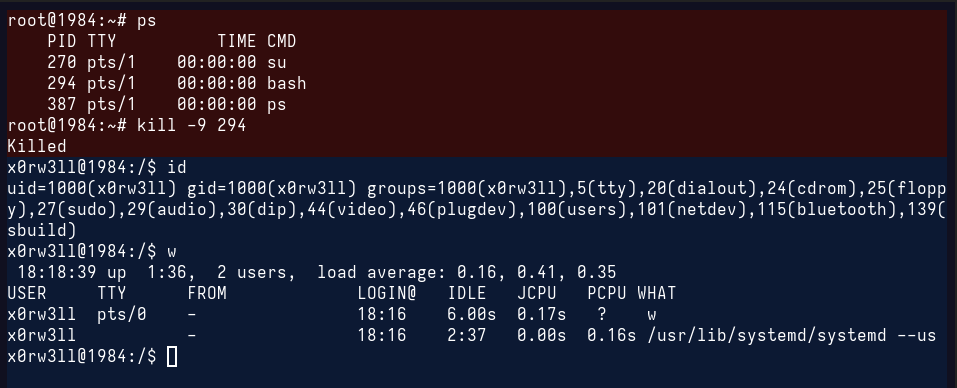

- Exhibit F: Merciless killing: added demo for killing wrong PIDs by mistake

2024-09-13

- Exhibit C: The case against /usr/local: added demo for Python/pip issues

2024-08-05

- Tips, Tricks, and Gotchas - The Root of All Evil: added the long-awaited yelling at root lovers

2024-02-25

- DANGER ZONE: introduced a new chapter; thoughts on things

2024-02-15

- Music: updated highlights

- Series TV: added a new comedy show

- Tips, Tricks, and Gotchas - Bash: added Bash tips

- Tips, Tricks, and Gotchas - Functions & Aliases: added Bash functions and aliases

The Root of All Evil

Prologue

The year is 2024 AD. We have flying cars (sort of), Quantum technology, potential cures for cancer, new and powerful space telescopes, and users still use sudo/root for EVERYTHING. It's as if the entire world moved on, and a significant subset of users decided to stick to the past and horrible opinions/advice some other misinformed users left some 5, 10, or 15 years ago. If you're given this link, you need to read on. Stick around because there are real demos which will deliver the point straight to your proverbial doorstep

What the hell is root, sudo, or any of that witchcraft?

Let's think of your operating system as a car for a moment. For the sake of simplicity, we're doing good ol' pre-2000s tech, so no Engine Start/Stop buttons, nothing fancy; as simple as it is to insert the key and switch. As an "unprivileged user", you're free to turn on the cabin lights, turn the steering wheel to a certain degree, move a few things around here and there, but not much beyond that. What if you need to, say, start moving? Well, you switch the key to ignition, start the car and off you go. That right there is a "privileged" operation, meaning you need to 1) have the key, and 2) actually start the car

Now, imagine how absurd it would be to start the engine just to turn on the cabin lights, only to switch it off after. This is your brain on drugs what you're doing when you do something like sudo ssh user@host, root@kali~:# echo "I am going to do EVERYTHING as root because I can"

Back to starting the engine just to turn on the cabin lights analogy. Let's think about why that's a bad idea:

- Fuel, oil, and coolant are wasted

- Environment's polluted over something that never warranted that action

- Gears, belts, spark plugs, and any/all parts involved in the process are worn out (however insignificant this might be, it ultimately adds up on the long run)

- Battery life's degraded

- ... (you get the idea by now)

Now, let's leave the garage and come back to technicalities. Remember: turning on the cabin lights is any action your unprivileged user can do, and starting the engine and driving is anything your privileged user(s) can perform. In techy-techy terms, privileged actions require privileged access

But what are privileged actions? Here are a few examples:

- Managing system services

- Modifying system files

- Managing packages

- Sending RAW packets

- Modifying files belonging to other users/groups

- Capturing packets

- [Un]loading kernel modules

- Modifying kernel command-line params

- Modifying boot entries

Anything that does not require privileged access SHOULD NOT be given one. Here are some examples:

- Starting the browser to navigate the World Wide Web (if you do this as a privileged user, please throw your laptop out the window until you understand why that's terrible)

- Reading, writing, executing, and/or removing (user|group)-owned files

- SSH, SMB, FTP, TELNET, SMTP, SNMP, HTTP, ... access

- Managing user services

- Running most programs (unless otherwise prompted for privileged access)

- ... (the list goes on)

What's actually so bad if I perform everything I want while privileged?

Where do we even begin? Let's populate a list of things:

- Dear baddies, here's my entire computer at your disposal, with love xoxo (see Exhibit A: Mi Kali, su Kali <3)

- Accidental data loss (

sudo rm -rf /oops/i/didnt/mean/to/remove/the/entire/etc/directory111one1eleven1) (see Exhibit B: O' Filesystem, where art thou?) - Python import path conflicts (see Exhibit C: The case against /usr/local)

- Broken permissions? Who needs 'em anyway, amirite? (see Exhibit D: Look how they massacred my perms)

- Wait, wtf is

DISPLAY :0? And why can't root just "open" it? (see Exhibit E: Can't see me) - Oh, you killed the wrong PID? Sucks to be you (see Exhibit F: Merciless killing)

Let's face it: we ALL make mistakes. Some of these mistakes might be forgivable, and some others...not so much. Deleting a text file that just says "test" is meh, but deleting an entire directory like /usr, /boot, /etc, or whatever else by mistake? That's an unforgivably expensive mistake with no one to blame but yourself. You might think "oh, I definitely know better than all those losers that don't know what they're doing", and you'd still be absolutely wrong. Everyone makes mistakes; you, me, that guy over there, that girl on the other side of the globe, those people studying arctic climate--everyone. You're certainly not better, but if you let your ego take you down that joyride, by all means enjoy it until you don't.

And yes, some programs WILL NOT actually work as sudo/root because of the different shell/environment variables available to your user vs sudo/root. The most critical thing that can happen to you, from a security standpoint, is running unknown binaries/code 1) as root, and 2) without FULLY understanding what they do. Sit tight; this is gonna be demoed next

Ugh, I just hate having to type my password every N minutes

Stop being lazy. I'm not gonna sugarcoat anything here. I'd rather type my complex password 300 times a day than take the risk with any of the aforementioned mishaps/dangers. The "convenience" factor is just not worth it for me. If you absolutely must, maybe extend your token validity (NOT recommended, but up to you), or turn to other means of authentication (again, up to you; we're not covering those here)

Closing thoughts

You're a supposed "security professional". If I were your client, and you told me that running this web application as root is terrible practice whilst having root@kali:~# in your screenshots, I'd never be inclined to do any future business with you. Plain and simple. If you're not practicing what you're preaching, your legitimacy will be questioned. Again, I'm not shying away from calling out bad practices. If you wanna get places in this industry, learn to do things correctly. If not for anything else, at least for yourself and your own systems' sake, and more importantly, for doing things the right way. Additionally, this entire topic was already covered some 5 years ago in this blog post by the Kali dev team. Please make a habit of reading docs and blog posts; they're there to help you

Exhibit A: Mi Kali, su Kali <3

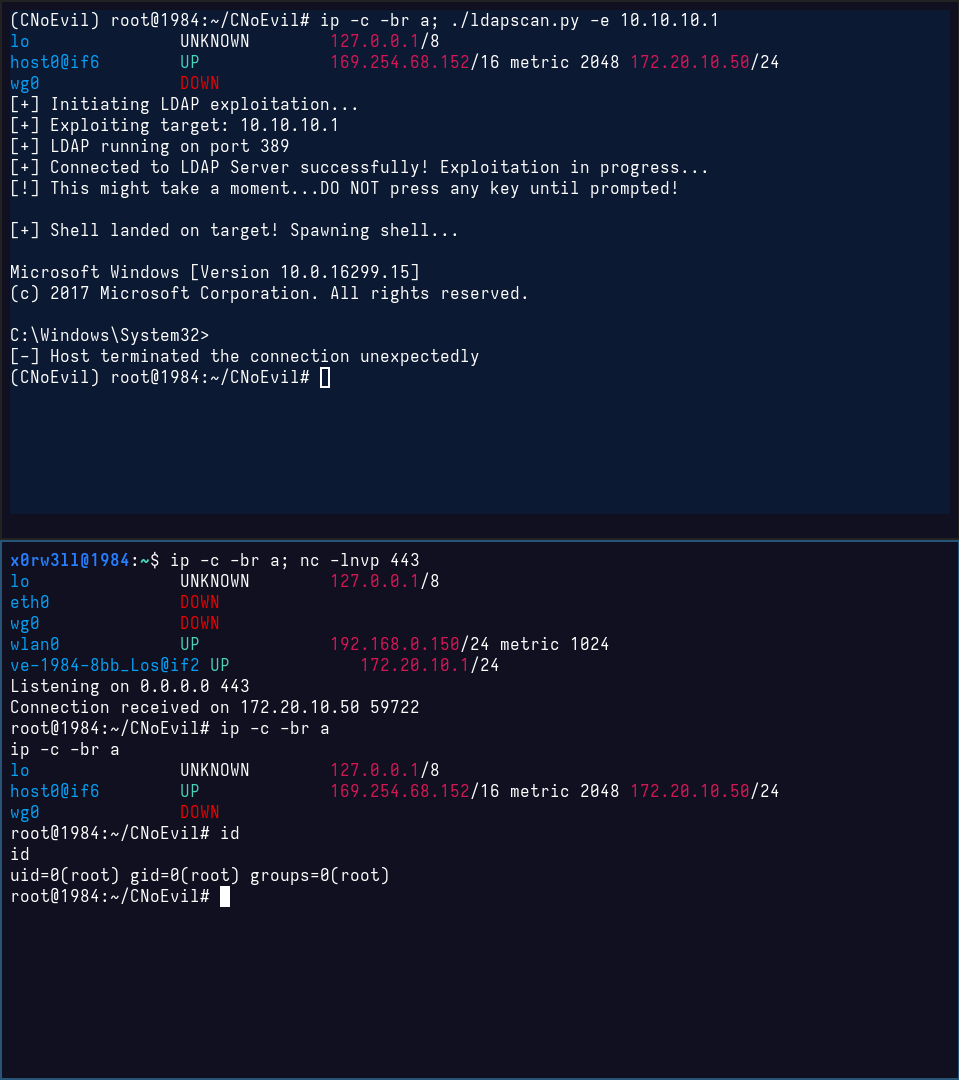

In this quick demo, we will show how an attacker can quickly (and effortlessly) gain a root shell on your system because you decided to clone a questionable Git repository and executed the script(s)/binaries it provided as root

Enter: CNoEvil

CNoEvil is a fictitious repository that provides an "LDAP exploitation and scanning" script. The source code thereof will not be released, but I will walk you through what I did. The instructions shown in the README are as follows:

root@kali:~# git clone git@github.com:X0RW3LL/CNoEvil.git

root@kali:~# cd CNoEvil/

root@kali:~/CNoEvil# pip3 install -r requirements.txt

root@kali:~/CNoEvil# python3 ldapscan.py

LDAP scanner that utilizes state of the art

enumeration technologies and some fancy

military-grade AES-256 encryption that

obfuscates request payloads and hides

your traffic like the world is after you

Usage: ldapscanner.py [options] <IP>

Options:

-h: print this help message

-e: exploit mode

-d: DoS mode

-l: LDAP scanner

...

We can tell that the author implied running those steps as root as shown in the PS1 prompt root@kali:~#. Moreover, there's a considerable number of users who login as root by default anyway, and that's the audience I'm addressing. Now, let's have a look at the requirements.txt file:

urllib3==1.26.0

pytest-httpbin==1.0.0

requests>=2.28.2,!=2.26.0,!=2.27.1,!=2.27.0

./dist/requestd-2.28.2-py3-none-any.whl

ldap3>=2.5,!=2.5.2,!=2.5.0,!=2.6,!=2.5.1

pyOpenSSL>=21.0.0

colorama==0.4.6

Notice anything yet? The requirements list a typosquatted Python package requestd that was shipped along with the repo when it was cloned. That typosquatted malicious package does not need anything extra from the user's end; it fires the reverse shell as soon as the package is imported. Best part is: package is imported along with ANY selected argument (with the exception of -h to not raise suspicions). Even better: there's no explicit import statement for requestd--that import statement was rather obfuscated (however rudimentarily for the sake of this demo)

x0rw3ll@1984:~/dev/CNoEvil$ wc -l ldapscan.py

1812 ldapscan.py

x0rw3ll@1984:~/dev/CNoEvil$ grep import ldapscan.py

import os

from time import sleep

from multiprocessing import Process

import sys

sale, or importing the Program or any portion of it.

make, use, sell, offer for sale, import and otherwise run, modify and

x0rw3ll@1984:~/dev/CNoEvil$ grep requestd ldapscan.py

x0rw3ll@1984:~/dev/CNoEvil$

You'd think an 1812-line script would be something solid, but no. You absolutely must scrutinize it to the core. If you inspect the source code, you'll find the script doesn't really do anything at all. No, really, it doesn't. It adds random sleeps, some bogus shell output, while it calls back to the attacker's machine. Let's look at an example snippet:

def ldap_exploit(ip=''):

print('[+] Initiating LDAP exploitation...')

sleep(3)

print('[+] Exploiting target: {}'.format(ip))

sleep(1)

print('[+] LDAP running on port 389')

sleep(2.6)

print('[+] Connected to LDAP Server successfully! Exploitation in progress...')

print('[!] This might take a moment...DO NOT press any key until prompted!')

sleep(0.5)

if os.fork() != 0:

return

print('''

[+] Shell landed on target! Spawning shell...

Microsoft Windows [Version 10.0.16299.15]

(c) 2017 Microsoft Corporation. All rights reserved.

C:\Windows\System32>''')

print('[-] Host terminated the connection unexpectedly')

enc_key = xor(IV)

It really is just a fake shell printer (trololol, amirite?), but you wouldn't know that without reading the script, now would you? Let's move on to the part where you finally get owned. You'd think that running the code in a Python virtual environment would save you, but that couldn't be further from the truth. Venvs serve a specific purpose, and it has absolutely nothing to do with security

Demo time

In this demo, I am using my Kali on metal as the attacker machine (bottom terminal window), and a containerized Kali based on my filesystem (top terminal window). My attacker IP is 172.20.10.1, and the would-be victim IP is 172.20.10.50. Moreover, the victim container is running a Python venv called CNoEvil, following the README instructions and running everything as root. The victim container has a lighter/blue background color for visual distinction

Moving forward

All the above being said and done, please heed this warning as you could very well become the next victim to such attacks

- Do not login as root

- Do not execute scripts you cannot vet (or have them vetted by some reputable, trustworthy entity)

- Do not clone repositories as root/with sudo

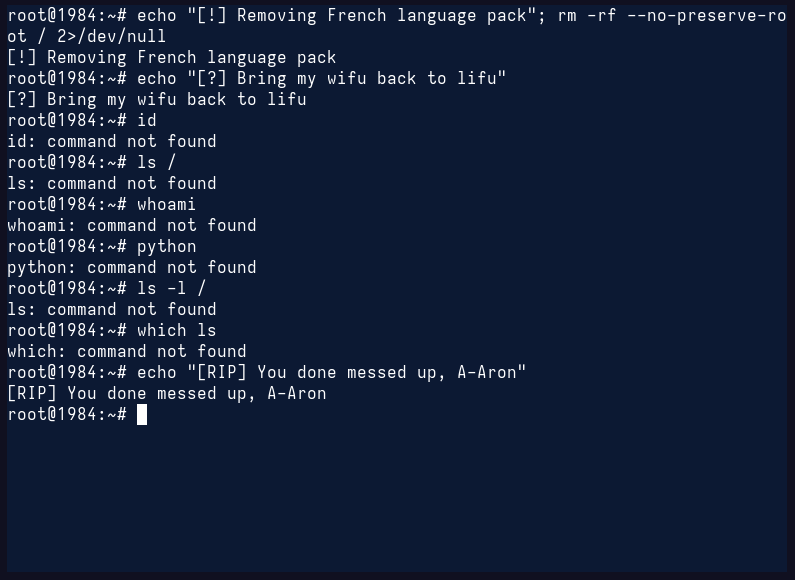

Exhibit B: O' Filesystem, where art thou?

Enter: rm -rf / --no-preserve-root

We've all seen it, and we've laughed at all the memes, but we cannot disregard the fact that it is a very real thing that can (and will) happen to everyone. I'm not talking specifically about rm -rf / --no-preserve-root, but the fact that everyone's bound to accidentally delete something. Let's destroy some filesystems, shall we?

I will be demoing removing the entire filesystem inside the ephemeral container, and that's enough to demonstrate the extent of the damage caused. Apply this to any directory that shouldn't have been mistakenly deleted in the first place, and you get the point

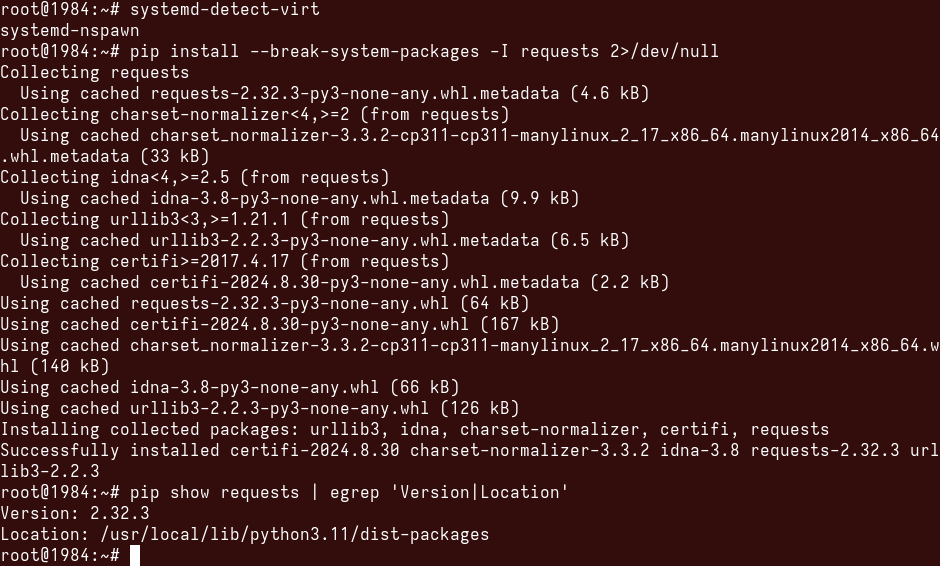

Exhibit C: The case against /usr/local

Prologue

This is a special one. Probably one everyone hates the most. The crown jewel of the most common issues with privileged execution of commands. It's no secret that Python is one of the most infamous languages out there, and it's no secret either that almost everyone knows what pip is; Python's package manager. In the past, pip might not have been such an issue (for the most part). Nowadays, however, pip is synonymous with nothing but "trouble"

Import Paths

Let's talk about your $PATH environment variable for a moment. When you type the name of a program, say, nmap, how does the shell know to autocomplete the binary name, let alone launch it?

Autocompletion technicalities aside, at a very basic level, the shell will start searching the $PATH environment variable for possible locations that hold said binary. This is certainly better than having to type, say, /usr/bin/nmap or /usr/sbin/poweroff. Neat, right?

Now, Python works on the same principle, more or less. When you import a library, Python will look for the default path where packages get installed (i.e. /usr/lib/pythonX/dist-packages/), where X is the version. Let's see that in action with a library everyone knows; requests. python3-requests is installed on Kali by default as it's a dependency for a considerable number of tools, so it's a good candidate for this demo

x0rw3ll@1984:~$ systemd-detect-virt

none

x0rw3ll@1984:~$ pip3 show requests

Name: requests

Version: 2.31.0

Summary: Python HTTP for Humans.

Home-page: https://requests.readthedocs.io

Author: Kenneth Reitz

Author-email: me@kennethreitz.org

License: Apache 2.0

Location: /usr/lib/python3/dist-packages

Requires:

Required-by: censys, crackmapexec, dropbox, faraday-agent-dispatcher, faraday-plugins, faradaysec, netexec, pyExploitDb, pypsrp, python-gitlab, pywinrm, requests-file, requests-toolbelt, theHarvester, tldextract

x0rw3ll@1984:~$ python3 -c 'import requests; print(requests)'

<module 'requests' from '/usr/lib/python3/dist-packages/requests/__init__.py'>

x0rw3ll@1984:~$

As we can see from the above output, this is running on metal, with no externally-managed packages installed via pip. The default path, as previously mentioned, is indeed /usr/lib/python3/dist-packages. We can also confirm this with pip list --path /usr/lib/python3/dist-packages

x0rw3ll@1984:~$ pip list --path /usr/lib/python3/dist-packages/

Package Version

------------------------------ -------------------------

aardwolf 0.2.8

adblockparser 0.7

aesedb 0.1.3

aiocmd 0.1.2

aioconsole 0.7.0

...

zope.deprecation 5.0

zope.event 5.0

zope.interface 6.4

zstandard 0.23.0.dev0

pip happily lists all the packages installed in that location, which is everything currenlty installed with its package name prefixed with python3-

Enter: Trouble

We'll now switch contexts; we'll spin up an ephemeral container based on the host file system so that we retain the same packages and everything in its place, and not mess up the actual host with potentially breaking changes. Additionally, we'll be running everything as root for maximum effect. I will be using run0 instead of sudo to switch users so systemd can give us the nice, bright red background color. We'll use pip to install requests again with switches instructing it to break system packages, and ignore currently installed packages. This is done for demonstration purposes only, and should not be used for the trouble that ensues. Recall from the output above showing requeststhat the currently installed version is 2.31.0

Note how pip now reports the package being installed to /usr/local/lib/python3.11/dist-packages instead of /usr/lib/python3/dist-packages? Let's double-check whether the system-wide installation of python3-requests still exists

root@1984:~# pip list --path /usr/lib/python3/dist-packages | egrep '^requests '

requests 2.31.0

root@1984:~# pip list --path /usr/local/lib/python3.11/dist-packages | grep requests

requests 2.32.3

root@1984:~#

Now we have a real problem: we have two different versions of requests, namely 2.31.0 and 2.32.3 installed in two different locations; /usr/lib/python3/dist-packages and /usr/local/lib/python3.11/dist-packages. What does this mean? Well, different programs/scripts will be extremely unreliable when it comes to importing requests. They might end up importing one version or the other, depending on who's calling, where, under which context, etc. Moreover, some tools will have exactly equal Depends. That means that the tool is designed to work with a specific version of a library. This might be due to deprecated APIs, or other decisions made by the tool developer(s)

Demo

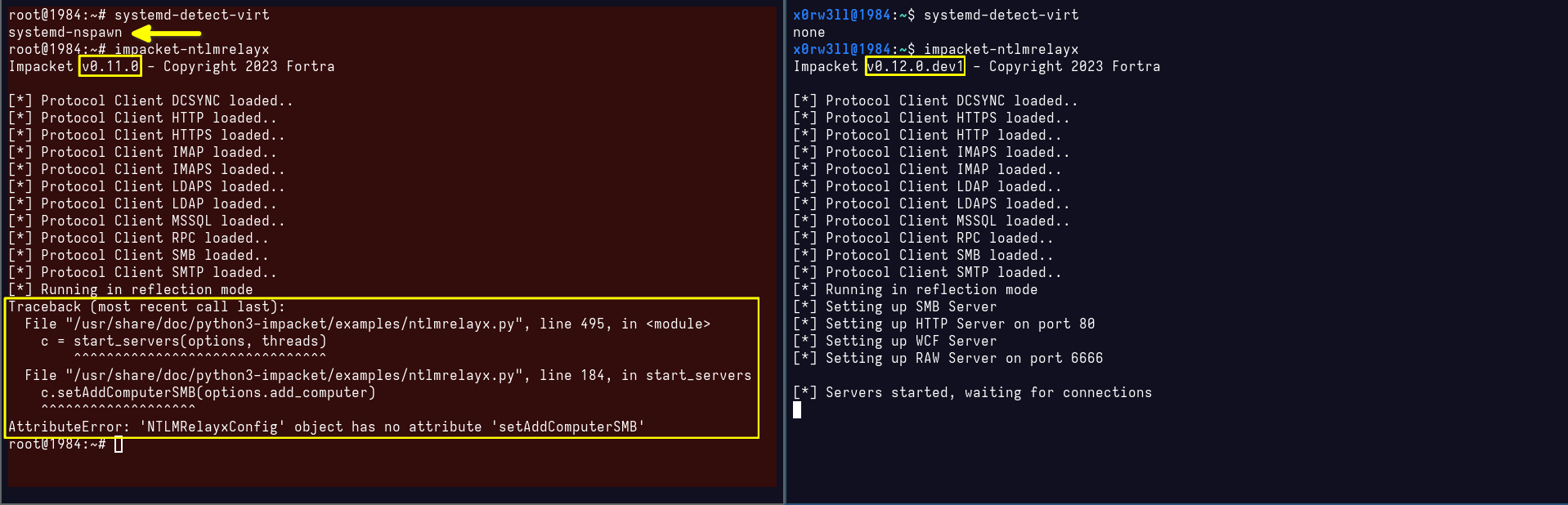

Let's see that in action with a package that will indeed throw some functionality-breaking errors; impacket. Here's what we have so far (before installing the externally-managed impacket package)

root@1984:~# apt policy python3-impacket; apt rdepends python3-impacket; pip show impacket

python3-impacket:

Installed: 0.11.0+git20240410.ae3b5db-0kali1

Candidate: 0.11.0+git20240410.ae3b5db-0kali1

Version table:

*** 0.11.0+git20240410.ae3b5db-0kali1 500

500 https://kali.download/kali kali-rolling/main amd64 Packages

500 https://kali.download/kali kali-rolling/main i386 Packages

100 /var/lib/dpkg/status

python3-impacket

Reverse Depends:

Depends: netexec (>= 0.11.0+git20240410)

Depends: wig-ng

Depends: spraykatz

Depends: smbmap

Depends: set

Depends: redsnarf

Depends: python3-pywerview

Recommends: python3-pcapy

Depends: python3-masky

Depends: python3-lsassy

Depends: python3-dploot

Depends: polenum

Depends: patator

Recommends: openvas-scanner

Depends: offsec-pwk

Depends: impacket-scripts (>= 0.11.0)

Depends: koadic

Depends: kali-linux-headless

Depends: autorecon (>= 0.10.0)

Depends: hekatomb

Depends: enum4linux-ng

Depends: crackmapexec

Depends: coercer

Depends: certipy-ad

Depends: bloodhound.py

Name: impacket

Version: 0.12.0.dev1

Summary: Network protocols Constructors and Dissectors

Home-page: https://www.coresecurity.com

Author: SecureAuth Corporation

Author-email:

License: Apache modified

Location: /usr/lib/python3/dist-packages

Requires:

Required-by: crackmapexec, dploot, lsassy, netexec

root@1984:~#

As we can see, python3-impacket has quite a number of reverse dependencies that may very well end up breaking. Let's break some!

After installing the package with pip as root, we get the following information

root@1984:~# pip show impacket

Name: impacket

Version: 0.11.0

Summary: Network protocols Constructors and Dissectors

Home-page: https://www.coresecurity.com

Author: SecureAuth Corporation

Author-email:

License: Apache modified

Location: /usr/local/lib/python3.11/dist-packages

Requires: charset-normalizer, dsinternals, flask, future, ldap3, ldapdomaindump, pyasn1, pycryptodomex, pyOpenSSL, six

Required-by: crackmapexec, dploot, lsassy, netexec

root@1984:~#

Right off the bat, besides the obvious location, we now have a downgraded version of impacket. Why is that? For starters, PyPI might not have been updated with the latest release of the package, while the Debian Python Team has taken the lead on that one, building the 0.12.0.dev1 release, as opposed to 0.11.0. Let's now try running some of our favorite impacket examples and see what happens

Sure enough, we definitely broke system packages! Even worse, the above error output doesn't even say much about what's actually wrong; it just complained that NTLMRelayxConfig has no attribute setAddComputerSMB. This attribute could have been added in the newer release of the package, or a result of conflicting import paths; one would have to really dig into it, line by line, to figure out where/what the problem is

Fixing the mess

The million-dollar question is: how does one fix this dependency hell? The answer is quite simple, really. All we need to do is filter those packages located at /usr/local/lib/python*/dist-packages, and uninstall them with elevated privileges much like they were originally installed. At this point, saving the package list to a file can be a good idea in case we want to install some of those packages properly later. For the purpose of this demo, I am going to have a bunch of externally-managed packages installed via pip so we can take a look at automating an otherwise tedious process

┌──(test㉿1984)-[~]

└─$ pip list --path /usr/local/lib/python3.11/dist-packages/

Package Version

------------------ ---------

aesedb 0.1.6

aiosmb 0.4.11

aiowinreg 0.0.12

asn1crypto 1.5.1

asyauth 0.0.21

asysocks 0.2.13

blinker 1.8.2

certifi 2024.8.30

cffi 1.17.1

chardet 5.2.0

charset-normalizer 3.3.2

click 8.1.7

colorama 0.4.6

cryptography 43.0.1

dnspython 2.6.1

dsinternals 1.2.4

Flask 3.0.3

future 1.0.0

h11 0.14.0

idna 3.8

impacket 0.11.0

itsdangerous 2.2.0

Jinja2 3.1.4

ldap3 2.9.1

ldapdomaindump 0.9.4

lsassy 3.1.12

markdown-it-py 3.0.0

MarkupSafe 2.1.5

mdurl 0.1.2

minidump 0.0.24

minikerberos 0.4.4

msldap 0.5.12

netaddr 1.3.0

oscrypto 1.3.0

prompt_toolkit 3.0.47

pyasn1 0.6.1

pycparser 2.22

pycryptodomex 3.20.0

Pygments 2.18.0

pyOpenSSL 24.2.1

pypykatz 0.6.10

requests 2.32.3

rich 13.8.1

six 1.16.0

tabulate 0.9.0

tqdm 4.66.5

unicrypto 0.0.10

urllib3 2.2.3

wcwidth 0.2.13

Werkzeug 3.0.4

winacl 0.1.9

As we can see, there's a considerable number of externally-managed packages that need to be dealt with. Since we're all about automation, let's get creative with a one-liner that does just that

┌──(test㉿1984)-[~]

└─$ pip list --path /usr/local/lib/python3.11/dist-packages/ | cut -d ' ' -f1 | egrep -v '^Package|---*' | tr '\n' ' '

aesedb aiosmb aiowinreg asn1crypto asyauth asysocks blinker certifi cffi chardet charset-normalizer click colorama cryptography dnspython dsinternals Flask future h11 idna impacket itsdangerous Jinja2 ldap3 ldapdomaindump lsassy markdown-it-py MarkupSafe mdurl minidump minikerberos msldap netaddr oscrypto prompt_toolkit pyasn1 pycparser pycryptodomex Pygments pyOpenSSL pypykatz requests rich six tabulate tqdm unicrypto urllib3 wcwidth Werkzeug winacl

We used cut -d ' ' -f1 to simply grab the first thing that's not a space, which happens to be the package names. We then egrep -v '^Package|---*' to filter out irrelevant output that would break the uninstall process since Package and --------- are obviously not valid Python packages. Finally, we used tr '\n' ' ' to translate newlines into spaces instead. Now that we got the desired output, let's incorporate it into the final pip command

┌──(test㉿1984)-[~]

└─$ sudo pip uninstall -y $(pip list --path /usr/local/lib/python3.11/dist-packages/ | cut -d ' ' -f1 | egrep -v '^Package|---*' | tr '\n' ' ')

Found existing installation: aesedb 0.1.6

Uninstalling aesedb-0.1.6:

Successfully uninstalled aesedb-0.1.6

Found existing installation: aiosmb 0.4.11

Uninstalling aiosmb-0.4.11:

Successfully uninstalled aiosmb-0.4.11

Found existing installation: aiowinreg 0.0.12

Uninstalling aiowinreg-0.0.12:

Successfully uninstalled aiowinreg-0.0.12

...

To confirm, we can run the listing again, and sure enough, all those externally-managed packages are now a thing of the past

Closing thoughts

Luckily, pip is now becoming more a thing of the past, and I do hope it gets sunset soon. Switches like --break-system-packages have been added as a deterrent to stop users from, well, breaking system packages. I cannot stress enough how terrible an idea it is to keep running everything as a privileged user all the time. Again, it does way more harm than good, and even if you do know what you're doing, you're still very much prone to making mistakes; we're all human, remember? We do make mistakes. Should you need to install Python packages, search the package repos for them first using apt search. If they exist, they will be prefixed with python3-. If they don't exist, you can always create virtual environments that will take care of path separation for you, and avoid breaking your currently installed packages

Further reading

- https://www.kali.org/blog/kali-linux-2023-1-release/#python-updates--changes

- https://peps.python.org/pep-0668/

- https://docs.python.org/3/library/venv.html

Exhibit D: Look how they massacred my perms

The basics

Let's talk about permission bits for a moment. Assume we have a file with the following permission bets set: -rwxrwxrwx. It should already be obvious there's some sort of a pattern here, but in case that wasn't clear enough, let's dissect it

We notice there are 4 main segments to these bits

# See chmod(1) for more information

r: read

w: write

x: execute (or search for directories)

X: execute/search only if the file is a directory or already has execute permission for some user

s: set user or group ID on execution

t: restricted deletion flag or sticky bit

- [rwx] [rwx] [rwx]

The first field can either be a - or a d for file, or directory, respectively

The first segment of [rwx] is owner permissions. Second is group, and third is "others". The account that first created the file would be its owner, and if the account belongs to a certain group, the file might also belong to that same group; meaning other members of the same group might be able to read the file as well, given correct permission bits are set. The final segment refers to others, meaning anyone else who's not 1) the owner, and 2) part of the group that owns the file

If you've ever looked up file permissions, you must have come across something like chmod 0777 <file>. What do these numbers mean? This would be what's known as numeric mode. Permission bits can be represented by octal digits, ranging from 0-7, derived by adding up the bits with the values 4, 2, and 1. First digit (0 in this case) selects the set user ID, set group ID, and restricted deletion/sticky attributes. Second digit selects the permissions for the user who owns the file (read (4), write (2), and execute (1)). Third selects permissions for other users in the same group, and fourth selects permissions for other users not in the file's group

In the case of -rwxrwxrwx, we agreed that we have 3 segments; rwx for each. Let's add them up; r = 4, w = 2, x = 1

4+2+1=7, therefore the first digit becomes 7. Same for the second and third, ergo 777. First digit, when omitted, is assumed to be a leading zero

Now, what if the file permissions are as follows: -rw-------. The segments are: rw-, ---, and ---. Add them up: 4+2+0=6. Omitted digits are zeros, remember? Therefore, octal permissions are 0600 in this case, meaning only the file owner has read and write permissions to the file, and everyone else cannot read, write, or execute/search for the file. Now you know why OpenSSH private keys are set with these permission bits by default; no one else should ever be able to read them but their respective owner alone. We've got the basics covered, yes? Let's move on

Why permissions are important

Your file system is not up for public demonstration, hey. Every user or group on the system should be able to enjoy the right to privacy to their files and how they want to control them. For example, I think we can all agree that the shadow file /etc/shadow should never, ever, be publicly readable by anyone waltzing in on the system without proper access rights, yes? It's the database that holds the hashed passwords of every user on the system after all. Imagine how easy it would have been if everyone could read that database, let alone modify it. From a security standpoint, permission bits can be considered the bare minimum anyone can adopt to place any sort of restrictive access controls to files and directories in the file system

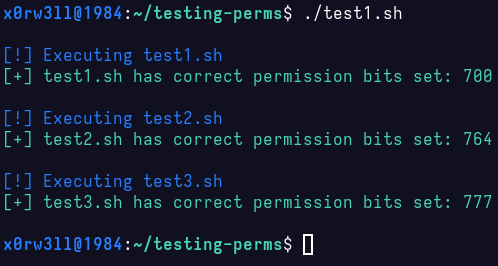

Demo I: Fictitious perms for demonstration purposes

For this demo, I've set up 3 scripts with different permission bits set. Each script checks its own permissions, and if they match, it calls the next script which does the same. This specific demo will highlight one thing many like to do, with little-to-zero idea what it does or why: sudo chmod -R 777 /path/to/some/directory

Before we go ahead, this is something you should never do unless you absolutely know what you're doing and why you're doing it. Do not follow random "advice" you find on forums, even if some of the answers swear it worked for them. Just because something works, it doesn't mean it was done correctly

Let's have a look at what the scripts do, and what their permissions are

x0rw3ll@1984:~/testing-perms$ ll

total 12

-rwx------ 1 x0rw3ll x0rw3ll 393 Sep 14 14:44 test1.sh

-rwxrw-r-- 1 x0rw3ll x0rw3ll 393 Sep 14 14:44 test2.sh

-rwx--x--x 1 x0rw3ll x0rw3ll 385 Sep 14 14:44 test3.sh

x0rw3ll@1984:~/testing-perms$ for i in `ls`; do echo $i; cat $i; echo; done

test1.sh

#!/usr/bin/bash

r="\e[31m"

g="\e[32m"

b="\e[34m"

e="\e[0m"

perms=`stat -c %a $0`

filename=`echo $0 | cut -d '/' -f2`

printf "\n%b[!] Executing $filename%b\n" $b $e

if [ $perms == 700 ]

then

printf "%b[+] $filename has correct permission bits set: $perms%b\n" $g $e

./test2.sh

else

printf "%b[-] $filename has incorrect permission bits set: $perms -- Expected: 700%b\n" $r $e

exit 1

fi

test2.sh

#!/usr/bin/bash

r="\e[31m"

g="\e[32m"

b="\e[34m"

e="\e[0m"

perms=`stat -c %a $0`

filename=`echo $0 | cut -d '/' -f2`

printf "\n%b[!] Executing $filename%b\n" $b $e

if [ $perms == 764 ]

then

printf "%b[+] $filename has correct permission bits set: $perms%b\n" $g $e

./test3.sh

else

printf "%b[-] $filename has incorrect permission bits set: $perms -- Expected: 764%b\n" $r $e

exit 1

fi

test3.sh

#!/usr/bin/bash

r="\e[31m"

g="\e[32m"

b="\e[34m"

e="\e[0m"

perms=`stat -c %a $0`

filename=`echo $0 | cut -d '/' -f2`

printf "\n%b[!] Executing $filename%b\n" $b $e

if [ $perms == 777 ]

then

printf "%b[+] $filename has correct permission bits set: $perms%b\n\n" $g $e

else

printf "%b[-] $filename has incorrect permission bits set: $perms -- Expected: 777%b\n\n" $r $e

exit 1

fi

x0rw3ll@1984:~/testing-perms$

Fairly straight forward. Let's see what happens when we execute

Now, let's do the stupid thing; chmod 777 * inside the testing-perms directory, and see how that plays out

Bear in mind you'll likely never run into a situation where you'll be given some handy guide with all the correct file permissions outlined therein. Often times, permissions will be provided as-is, and should not be modified unless, again, you know what you're doing and why. Imagine you did something incredibly stupid like sudo chmod -R 0777 /; this is 100% guaranteed to break your system beyond actual repair. There's just no way to keep track of which file(s) had which permissions, not to mention that it would take literal ages to go through every single file that was affected. It gets worse; at least sudo prompts you for a password (given the token's expired). Imagine running that as root, without paying attention to what file/directory you're modifying. Good luck with that!

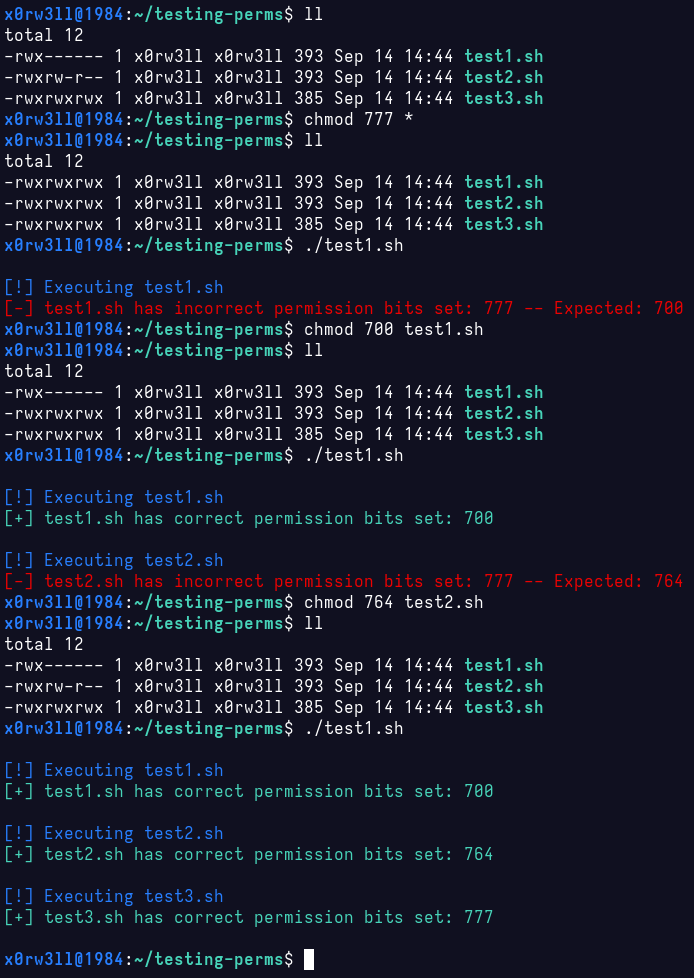

Demo II: OpenSSH keys

This one is more functional, and is often a point of headaches. The principal is the same, really, but I'm inviting you to think about what happens when you, say, sudo wget http://target/main.php?include=/path/to/id_rsa. Or when you download an OpenSSH private key file without remembering to set the proper permissions to it. If you've ever connected to, say, an AWS EC2 instance, you might remember it instructing you to set the key perms to 0400 before connecting. 0400 is more restrictive than 0600 since only the read bit is set, but no write is allowed

Closing thoughts

Hopefully those demos give you better insights into avoiding bad habits. Permissions are no joke, and can be a massive security risk if set incorrectly. Moreoever, you should always read error output; error messages are there to help you diagnose issues and troubleshoot them

Exhibit E: Can't see me

About DISPLAY

Let's talk about environment variables once more, specifically the DISPLAY variable this time. This variable, when set, tells programs what the current display is so they can draw their graphics on that display. Easy, right? Usually, it's set to :0, but it can also be something like :0.0. You can also have multiple displays, and instruct each program to draw its graphics by setting the DISPLAY variable beforehand. So who has the authority to those displays? The user running them!

After booting up the system, you're greetd (SWIDT?) with the login manager where you're prompted for your username and password. Once you're logged in, and you can already see the desktop environment, your username with which you logged in now controls that display that's set. It would be such a shame if someone else were to login, remotely, to your system as another user and be able to just see what you're looking at, right? Not only is it a massive security breach, obviously, but it also doesn't make sense. Well, what if you, being physically on the computer, switch users to root, and try running graphical applications? It's still the same. From your system's perspective, those are still very much two completely different users

This one is going to be very short and straight forward: a few examples of programs that can and will break when trying to run everything as sudo/root

IMPORTANT: Browser security

I believe I'd already alluded to that bit earlier, but I'm going to go ahead and emphasize it once more: browsers should never be run as root. Your browser is your gateway to the World Wide Wild Web. You'll see this in action as we try to run Chromium as a privileged user shortly

General word of caution

Whenever possible, and unless otherwise explicitly stated, do not try running graphical programs as a privileged user. One more thing to keep in mind is that not all environment variables are propagated to/shared with other users. This latter point explains why you can't just open or see graphical programs run as root/sudo since their DISPLAY environment variable is not set to your logged in user's

Exhibit F: Merciless killing

Dive right in

Last, but not least: making typos while killing processes. Imagine you want to kill PID 2944, but ended up killing PID 294 instead? The following happens

Notice how the colors changed? That signifies the login shell, i.e. Bash, was killed and thereby kicking the root user out of its session. Imagine you did this in the middle of something very important, like halfway through upgrading the system, flashing hardware, or what have you; it can be catastrophic, especially when there isn't really anything to ask whether you really meant to kill a specific process

Bash

Disclaimer: this entry is Bash-specific; some of the tips outlined below might or might not intersect with other shells

NB: Ctrl+ shall be annotated C-. Alt+ shall be annotated M- (as in Meta)

Using an $EDITOR for editing [long] commands

POV you're typing up a long command, and the terminal starts playing tricks on you. Tricks like the cursor moving all the way back to the beginning of the line, [visually] destroying your PS1, and part or all of the command being input. This is often a byproduct of terminal resizing. Let's say you started a maximized terminal window, say 50 rows 190 columns, then you decided to split the terminal vertically. The terminal size is now, say, 50 by 94. Dynamic sizing may not always be 100% reliable in some cases, so what's the best options here?

We can edit the commands using an editor, as opposed to using the shell prompt. I personally prefer using VIM for many reasons, with keybinds and convenience specifically taking the cake. Your preferred editor might be something else, so we're gonna wanna make sure we set the default editor first before proceeding

$ sudo update-alternatives --config editor

There are 4 choices for the alternative editor (providing /usr/bin/editor).

Selection Path Priority Status

------------------------------------------------------------

0 /bin/nano 40 auto mode

1 /bin/nano 40 manual mode

2 /usr/bin/nvim 30 manual mode

* 3 /usr/bin/vim.basic 30 manual mode

4 /usr/bin/vim.tiny 15 manual mode

Press <enter> to keep the current choice[*], or type selection number:

It's simple, really; all you have to do is input the Selection index that corresponds to the editor you want to set as a default. I already had vim.basic selected by previously inputting 3. Yours may very well start at 0 (/bin/nano), so you can hit Enter to leave the selected option as-is, or input another index

Next, we'll use Bash's handy edit-and-execute-command by invoking the shortcut combination (Ctrl+x Ctrl+e), or (C-x C-e) as Bash prefers to annotate it in the man-pages. The concept is simple: enter (or edit) whatever command you have, save the buffer and quit to execute said command, or quit without saving to abort it. What happens when you edit a command using an editor is that this command is saved in a temporary file in /tmp/bash-fc.{random-identifier}, then either the command(s) stored in that file are executed (upon saving the buffer and quitting), or the file gets deleted (upon quitting without saving)

Character repitition

This is for all the buffer overflow lovers out there. Have you ever caught yourself doing something silly like python -c 'print("A"*200)'? Let's look at the numbers, Jim; that's about 31 keystrokes, right?

Let's bring those down to 6. Alt+{count}, followed by the character we want repeated, "A" in this case, does just that. Try Alt+200 A; you hold down the Alt key while inputting 2,0,0, release the Alt key, then Shift+a (A)

What about all them hash-passers? I'm talking specifically about the LM portion of NTLM hashes where you want to pass 32 zeros. As you might have already realized, you cannot pass digits as literals for readline. So, how exactly do you do M-32 0 if it's gonna end up thinking you want to repeat 320 characters, whereas you really want 32 zeroes? Well, C-v!

The combo goes as follows: M-32 C-v 0. That is: count 32 (M-32), add the next character (0) typed to the line verbatim (C-v)

Movement

You've entered a somewhat long command, and then you realized you messed up a certain word at the beginning of it, middle, or wherever. Do you keep furiously pressing the arrow keys on your keyboard to move the cursor around? No...No, you do not, my friend. You learn how to do things faster and more efficiently, so let's cover some basics

C-a: move the cursor to the start of the current lineC-e: move the cursor to the end of the current lineC-f: move the cursor one character forwardC-b: move the cursor one character backwardM-f: move the cursor one word forwardM-b: move the cursor one word backward

We can also combine the beauty of readline's convenience with some of the above movements. If you know your way around VIM movements, this part is gonna be breezy for you. Let's have a look at the following example

$ echo The quick brown fox jumps iver the lazy dog|| # where || indicates the cursor position

Let's say we want to jump back to the word "iver" to fix the typo. We can do so by issuing the following combo: M-4-b. That is, move the cursor 4 words back, where "dog" is the first word, "lazy" second, "the" third, and finally "iver" fourth

File/directory name typos

Let's admit it, we've all been there. ls /user/bin, or ls /usr/lin, followed by the inevitable "ugh". No one likes that. Did you know that Bash supports spelling correction? There are some caveats, but I'll leave those for you to figure out. Hint: chained commands, cursor position, starting versus ending characters

Let's have a look at the following example where we messed up not one, but three words. Normally, we'd either rewrite the entire thing, or go back one character/word at a time, which is not cool

$ ls -l /user/bim/vash

So how do we fix this quickly? C-x s. That is Ctrl+x then s. It really is that simple. We go from /user/bim/vash to /usr/bin/bash. Pretty cool, eh?

Text manipulation

C-d: delete the character under the cursor (forward deletion)M-d: delete the word under the cursor (forward deletion)C-w: delete one word behind the cursorC-u: delete everything behind the cursorM-r: revert changes made to current lineM-#: comment out the current line (useful if you wanna hold onto a command for later reference)M-t: transpose words behind the cursor (useful for flipping argument ordering, for example)C-t: transpose characters behind the cursor (useful for fixing typos likeclaer=>clear)M-l: lowercase word under the cursorM-u: uppercase word under the cursorM-c: capitalize word under the cursor

Macros

Let's say there's a handful of repeating commands/functions that you'd like to issue, but you couldn't be bothered to write a script/alias/function for it, or you simply only need them for that one shell session. This is where keyboard macros shine. The process is as follows: start recording the macro, type out (and/or execute) whatever commands to be recorded, end the macro recording, and finally execute the macro at any point later in the same shell. Here's an example

# Ctrl+x (

$ echo hello world # this is where you start typing

hello world

# Ctrl+x )

# Notice we ended the recording _after_ executing the command

# meaning invoking the macro will _also_ execute the command

# Ctrl+x e

$ echo hello world

hello world

Functions & Aliases

Hot tip: don't flood your ~/.bashrc with function/alias declarations. You can offload those to ~/.bash_aliases instead if your distro sources it by default

This is going to include some of the aliases I personally like to use, so here goes nothing

Aliases

alias update='sudo apt update' # update the repo index

alias uplist='apt list --upgradable' # show packages pending upgrade

alias upgrade='sudo apt full-upgrade -y' # full-upgrade, yes

alias _cleanup='sudo apt autoclean && sudo apt autoremove'

alias _wg='sudo wg-quick up wg0'

alias _diswg='sudo wg-quick down wg0'

alias ipa='ip -br a'

alias ipr='ip r'

alias _jctl='sudo journalctl --vacuum-time=1d' # clean up the journal

alias xc="xsel -b -i < $1" # Depends: xsel - copy the contents of file ($1) into clipboard: xc /path/to/file

alias cnf="command-not-found --ignore-installed $1" # for when you want a quick way to figure out which packages provides a certain command

alias kb='curl -L https://www.kernel.org/finger_banner' # get the latest kernel release versions

alias cpowersave='sudo cpupower frequency-set -rg powersave' # Depends: linux-cpupower

alias cschedutil='sudo cpupower frequency-set -rg schedutil'

alias cperformance='sudo cpupower frequency-set -rg performance'

alias bw='bitwise' # Depends: bitwise

Functions

# For when you're using a VPN, but would like to execute some command

# using your non-VPN connection

utnl () {

# short for un-tunnel (Home network _only_)

if [[ $# -eq 0 ]]

then

echo "[!] Usage: $FUNCNAME <IFACE>"

else

GW=$(ip r | awk '/default/ { print $3 }')

SUB=${GW%.*}

sudo ip netns add home

sudo ip netns exec home ip link set lo up

sudo ip link add link $1 name home0 type macvlan

sudo ip link set home0 netns home

sudo ip -n home a add $SUB.250/24 metric 1024 brd + dev home0

sudo ip netns exec home sysctl -q net.ipv6.conf.home0.disable_ipv6=1

sudo ip netns exec home sysctl -qp 2>/dev/null

sudo ip -n home link set home0 up

sudo ip -n home r add default via $GW

fi

}

# To be used after utnl eth0/wlan0 has already been started

nex () {

# short for netns-exec

y="\e[33m"

r="\e[31m"

m="\e[35m"

g="\e[32m"

e="\e[0m"

cmd=$( printf '%q ' "${@:1}" )

if [[ $# -eq 0 ]]

then

echo "[!] Usage: $FUNCNAME <command> [args]"

elif [ $1 = "destroy" ]

then

if sudo ip netns delete home 2>/dev/null

then

printf "%b[+] home network namespace destroyed%b\n" "$g" "$e"

else

printf "%b[-] home network namespace not found. Skipping...%b\n" "$r" "$e"

fi

elif echo $1 | grep -q firefox

then

if ps aux | grep firefox | grep -v grep 1>/dev/null

then

printf "%b[-] Close already running instance(s) of Firefox and try again%b\n" "$r" "$e"

else

printf "[+] Executing %b| %s |%b on %bhome%b as %b%s%b\n\n" "$y" "$cmd --display=:1" "$e" "$m" "$e" "$g" "$USER" "$e"

sudo ip netns exec home runuser - $USER home -c "$cmd --display=:1"

fi

else

printf "[+] Executing %b| %s |%b on %bhome%b as %b%s%b\n\n" "$y" "$cmd" "$e" "$m" "$e" "$g" "$USER" "$e"

sudo ip netns exec home runuser - $USER home -c "$cmd"

fi

}

# Compare SHA256 checksums (useful for downloaded ISOs/packages)

_checksum () {

g="\e[32m"

r="\e[31m"

e="\e[0m"

if [[ $# -lt 2 ]]

then

echo "[!] Usage: $FUNCNAME <file> <SHA256>"

else

[ "$(sha256sum $1 | cut -d ' ' -f1)" == "$2" ] && printf "%b\n[+] SHA256 checksum OK\n\n%b" "$g" "$e" || printf "%b\n[-] SHA256 checksum mismatch\n\n%b" "$r" "$e"

fi

}

# dmesg logs, separating message levels to different output files

dlogs () {

dmesg -t > dmesg_current

dmesg -t -k > dmesg_kernel

dmesg -t -l emerg > dmesg_current_emerg

dmesg -t -l alert > dmesg_current_alert

dmesg -t -l crit > dmesg_current_crit

dmesg -t -l err > dmesg_current_err

dmesg -t -l warn > dmesg_current_warn

dmesg -t -l info > dmesg_current_info

}

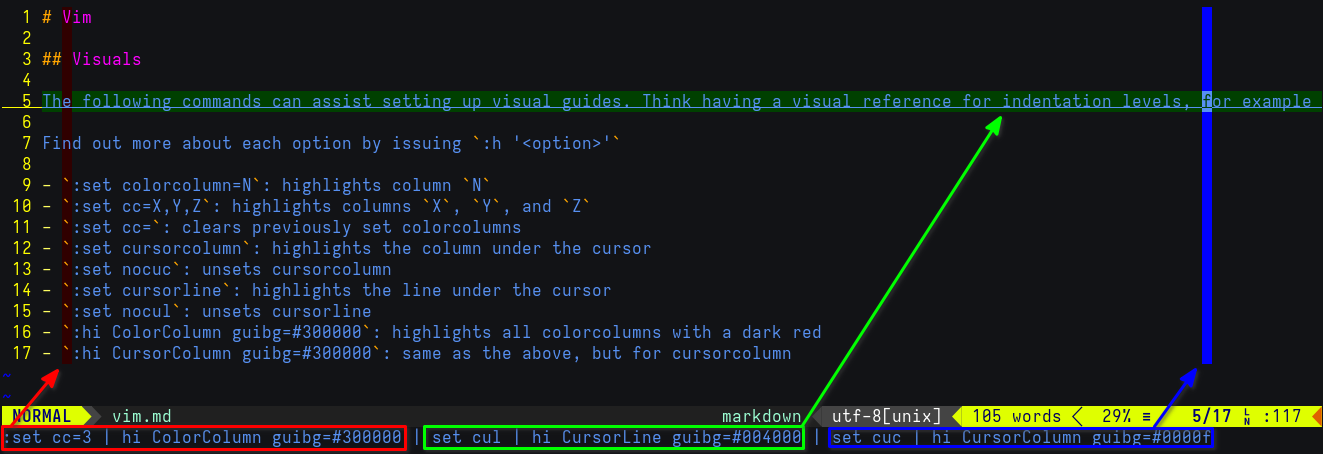

VIM

Visuals

The following commands can assist setting up visual guides. Think having a visual reference for indentation levels, for example

Find out more about each option by issuing :h '<option>'

- (Ex-mode)

:set colorcolumn=N: highlights columnN - (Ex-mode)

:set cc=X,Y,Z: highlights columnsX,Y, andZ - (Ex-mode)

:set cc=: clears previously set colorcolumns - (Ex-mode)

:set cursorcolumn: highlights the column under the cursor - (Ex-mode)

:set nocuc: unsets cursorcolumn - (Ex-mode)

:set cursorline: highlights the line under the cursor - (Ex-mode)

:set nocul: unsets cursorline - (Ex-mode)

:hi ColorColumn guibg=#300000: highlights all colorcolumns with a dark red - (Ex-mode)

:hi CursorColumn guibg=#300000: same as the above, but for cursorcolumn

Movement

- (NORMAL)

3w: jump 3 words forward (cursor is placed at the beginning of the Nth word) - (NORMAL)

5b: jump 5 words backward - (NORMAL)

gg: jump to top (file) - (NORMAL)

G: jump to bottom (file) - (NORMAL)

zz: position line under the cursor at the middle of the screen - (Ex-mode)

:N: jump to line #N $ vim <file> +N: open<file>placing the cursor at lineN

Splitting

- (Ex-mode)

:sp <file>: open<file>in a horizontally-split window - (Ex-mode)

:term: open a terminal in a horizontally-split window - (Ex-mode)

:vsp <file>: open<file>in a vertically-split window - (Ex-mode)

:vert term: open a terminal in a vertically-split window - (NORMAL)

Ctrl+ww: cycle between splits (replace secondwwithh|j|k|lto move in a specific direction between splits)

Text manipulation

- (Ex-mode)

:4,12s/replace_me/replaced/g: replace all (/g) instances ofreplace_mein lines 4 through 12 withreplaced - (Ex-mode)

:50,100d: delete lines 50 through 100 - (NORMAL)

dd: delete entire line under cursor - (NORMAL)

yy: yank (copy) entire line under cursor

Misc

$ vim -x <file>: create a blowfish2-encrypted<file>- (Ex-mode)

:tabnew <file>: open<file>in a new tab - (NORMAL)

Shift+K: view API reference for the function under the cursor (if supported/found) - (NORMAL)

gt: go to next tab - (NORMAL)

m<char>: set a mark usingchar. Example:masets a mark for the lettera - (NORMAL)

'<char>: jump to markchar. Example:'ajump to marka - (NORMAL)

ZZ: quit, saving changes - (NORMAL)

ZQ: quit without saving

apt-patterns

Let's talk about advanced package management. In this section, we'll visit one of apt's lesser known features—apt-patterns. If you've ever found yourself jumping hoops trying to manage multiple packages, doing stuff like apt purge $(dpkg -l | grep whatever | cut -d ' ' -f3 | tr '\n' ' '), you're gonna find this entry useful. Let's see how it works

What's a PATTERN?

First things first: semantics. An apt PATTERN is different than a RegEx pattern, and we need to be very mindful of that distinction because it can get confusing when first reading the man-pages. An apt PATTERN is syntax used with apt, and is usually prefixed with special characters, namely a question mark ? for long-form syntax, and a tilde ~ for short-form syntax. An example would be ?installed, versus ~i. With that in mind, ~i and the likes, be it long or short form, is the PATTERN to which we refer in this section. Again, not to be confused with REGEX, which APT-PATTERNS(7) will highlight as such. Now that we got the semantics out of the way, let's put that into practice

Querying packages

Assume you're shopping for packages from the repository. Quickest thing you can do is apt search <package>, and that's great and all, but sometimes we need more than just a quick fuzzy search. That is, we might be looking for specific package archs, versions, exact names, or even sections. The great thing about apt-patterns is the fact that we can specify whether we want to look exclusively for installed packages, and really just have more fine-grained control over the search. Let's say we want to search for installed packages that include json in the name

$ apt list '~i ~njson'

libcjson1/kali-rolling,now 1.7.18-3+b1 amd64 [installed,automatic]

libcpanel-json-xs-perl/kali-rolling,now 4.38-1+b1 amd64 [installed,automatic]

libjson-c-dev/kali-rolling,now 0.18+ds-1 amd64 [installed]

libjson-c5/kali-rolling,now 0.18+ds-1 amd64 [installed]

...

~n stands for name. We could also use the long-form syntax as follows:

$ apt list '~i ?name(json)'

libcjson1/kali-rolling,now 1.7.18-3+b1 amd64 [installed,automatic]

libcpanel-json-xs-perl/kali-rolling,now 4.38-1+b1 amd64 [installed,automatic]

libjson-c-dev/kali-rolling,now 0.18+ds-1 amd64 [installed]

libjson-c5/kali-rolling,now 0.18+ds-1 amd64 [installed]

...

Now, let's try to find packages that are not installed with json in the name. The logical negation operator works as well as you'd expect; simply prefix the pattern with it

$ apt list '!~i ?name(json)'

dwarf2json/kali-rolling 0.6.0~git20200714-0kali1 amd64

dwarf2json/kali-rolling 0.6.0~git20200714-0kali1 i386

ffjson/kali-rolling 0.0~git20181028.e517b90-1.1 amd64

ffjson/kali-rolling 0.0~git20181028.e517b90-1.1 i386

gir1.2-json-1.0/kali-rolling 1.10.6+ds-1 amd64

gir1.2-json-1.0/kali-rolling 1.10.6+ds-1 i386

gir1.2-jsonrpc-1.0/kali-rolling 3.44.1-1 amd64

gir1.2-jsonrpc-1.0/kali-rolling 3.44.1-1 i386

...

As we can see, the results include i386 packages since I have a multi-arch setup. Let's include only amd64 packages

$ apt list '!~i ?name(json) ~ramd64'

dwarf2json/kali-rolling 0.6.0~git20200714-0kali1 amd64

ffjson/kali-rolling 0.0~git20181028.e517b90-1.1 amd64

gir1.2-json-1.0/kali-rolling 1.10.6+ds-1 amd64

gir1.2-jsonrpc-1.0/kali-rolling 3.44.1-1 amd64

gjh-asl-json/kali-rolling 0.0+git20210628.867c5da-1 amd64

golang-easyjson/kali-rolling 0.7.7-1+b8 amd64

...

Now, let's assume you're just window-shopping, metaphorically, for packages under certain sections. Say you want to figure out what games are available in the repos, specifically those with the amd64 arch

$ apt list '~sgames ~ramd64'

0ad-data-common/kali-rolling,kali-rolling 0.0.26-1 all

0ad-data/kali-rolling,kali-rolling 0.0.26-1 all

0ad/kali-rolling 0.0.26-8 amd64

1oom/kali-rolling 1.11-1 amd64

2048-qt/kali-rolling 0.1.6-2+b3 amd64

2048/kali-rolling 1.0.0-1 amd64

3dchess/kali-rolling 0.8.1-22 amd64

...

Querying dependencies

If you're coming from aptitude where it's easy to just aptitude why <package> and it tells you why a package is installed, i.e. what packages DEPEND on said <package>, this is gonna be helpful. Granted it's not going to be exactly the same as aptitude does it, but it's close enough with a vanilla pattern; reason being that aptitude will also list Suggests and Provides, not strictly Depends. I will leave those as an exercise to the reader, but it shouldn't be too difficult to figure out how to combine multiple patterns in one go. Not to mention man apt-patterns will be your trusty resource, as always

Let's try to figure out the reverse dependencies of, say, libx86-1. There are 3 ways to do it; aptitude why libx86-1 (if aptitude is installed), apt rdepends libx86-1 (which can get pretty crowded and is not friendly with package management without Bash-Fu), and apt list '~i ~D(?exact-name(libx86-1))'

aptitude is great because it will trace the reverse dependencies all the way to the top. This will make more sense when seen in output, so let's compare how each command does it

$ aptitude why libx86-1

i kali-linux-default Depends kali-linux-headless

i A kali-linux-headless Depends i2c-tools

i A i2c-tools Recommends read-edid

i A read-edid Depends libx86-1 (>= 1.1+ds1)

$ apt rdepends libx86-1

libx86-1

Reverse Depends:

Depends: read-edid (>= 1.1+ds1)

$ apt list '~i ~D(?exact-name(libx86-1))'

read-edid/now 3.0.2-1.1 amd64 [installed,local]

Notice how aptitude traced rdeps all the way to the top? i.e. kali-linux-default depends on kali-linux-headless, which in turn depends on i2c-tools, which recommends read-edid, which is what depends directly on libx86-1. In the other examples using apt, you don't get that nice relational table highlighting the dependency list, but you do get the actual reverse depency nonetheless. The difference between apt rdepends and apt list then becomes a matter of cooperability with managing packages. apt rdepends can be used in cases where you quickly want to check the reverse dependencies of certain packages, whereas apt list ... is used when you want to do something with those queried reverse dependencies. We'll look at a similar scenario in this following section

Package management

Here comes the risky part; actually managing packages. Please pay close attention because you can easily mess this one up when first trying it out. In this section, we'll figure out how to handle multiple packages based on specific criteria as specified by PATTERNs. I build custom kernels very frequently, and I tend to keep previous releases in case things go wrong so I'm not stuck with an unbootable kernel. Once I'm certain the new kernel release works as expected, I purge the previous one(s). I could type up apt purge --auto-remove linux-image-* linux-headers-*, but that's just too much unnecessary typing. Let's do this efficiently with apt-patterns. For starters, it's always a good idea to just list packages first to make sure we have the intended ones in place before passing destructive verbs like remove or purge. At the time of writing this entry, I'm on the 6.13.0-rc4 kernel, and I still have previous release candidates 1, 2, and 3. I also have the debug symbols installed, so I'll want those purged as well. Let's list those installed packages, specifying the name RegEx

$ apt list '~i ~n"^linux-(image|headers)-6.13.0-rc[1-3]"'

linux-headers-6.13.0-rc1/now 6.13.0-rc1-00695-gf941996d0cb8-5 amd64 [installed,local]

linux-headers-6.13.0-rc2/now 6.13.0-rc2-00401-g48c84277de4c-2 amd64 [installed,local]

linux-headers-6.13.0-rc3/now 6.13.0-rc3-00674-gffe093fb67f8-3 amd64 [installed,local]

linux-image-6.13.0-rc1-dbg/now 6.13.0-rc1-00695-gf941996d0cb8-5 amd64 [installed,local]

linux-image-6.13.0-rc1/now 6.13.0-rc1-00695-gf941996d0cb8-5 amd64 [installed,local]

linux-image-6.13.0-rc2-dbg/now 6.13.0-rc2-00401-g48c84277de4c-2 amd64 [installed,local]

linux-image-6.13.0-rc2/now 6.13.0-rc2-00401-g48c84277de4c-2 amd64 [installed,local]

linux-image-6.13.0-rc3-dbg/now 6.13.0-rc3-00674-gffe093fb67f8-3 amd64 [installed,local]

linux-image-6.13.0-rc3/now 6.13.0-rc3-00674-gffe093fb67f8-3 amd64 [installed,local]

Note that when we want extended RegEx in the query, we'll ideally want to wrap it in quotation marks. In the above example, we're looking for packages beginning (^) with linux-, followed by either image or headers, 6.13.0-rc, followed by any one digit between 1 and 3 (inclusive). Sure enough, we have all the packages we need, and because the output is a listing, it's extremely easy to handle those packages with apt as-is. For extra caution, however, dear reader, I recommend you simulate the action before going live with it; meaning apt -s <VERB> <packages>. I've already done that in the past, so I know what the syntax is and what packages have those names. Here's how I'll purge those in one go

$ sudo apt purge --auto-remove '~i ~n"^linux-(image|headers)-6.13.0-rc[1-3]"'

[sudo] password for x0rw3ll:

REMOVING:

linux-headers-6.13.0-rc1* linux-headers-6.13.0-rc3* linux-image-6.13.0-rc1-dbg* linux-image-6.13.0-rc2-dbg* linux-image-6.13.0-rc3-dbg*

linux-headers-6.13.0-rc2* linux-image-6.13.0-rc1* linux-image-6.13.0-rc2* linux-image-6.13.0-rc3*

Summary:

Upgrading: 0, Installing: 0, Removing: 9, Not Upgrading: 0

Freed space: 7,121 MB

Continue? [Y/n]

(Reading database ... 788000 files and directories currently installed.)

Removing linux-headers-6.13.0-rc1 (6.13.0-rc1-00695-gf941996d0cb8-5) ...

Removing linux-headers-6.13.0-rc2 (6.13.0-rc2-00401-g48c84277de4c-2) ...

Removing linux-headers-6.13.0-rc3 (6.13.0-rc3-00674-gffe093fb67f8-3) ...

Removing linux-image-6.13.0-rc1 (6.13.0-rc1-00695-gf941996d0cb8-5) ...

update-initramfs: Deleting /boot/initrd.img-6.13.0-rc1

Removing kernel version 6.13.0-rc1 from systemd-boot...

Generating grub configuration file ...

Found background image: /usr/share/images/desktop-base/desktop-grub.png

Found linux image: /boot/vmlinuz-6.13.0-rc4

Found initrd image: /boot/initrd.img-6.13.0-rc4

Found linux image: /boot/vmlinuz-6.13.0-rc3

Found initrd image: /boot/initrd.img-6.13.0-rc3

Found linux image: /boot/vmlinuz-6.13.0-rc2

Found initrd image: /boot/initrd.img-6.13.0-rc2

Found linux image: /boot/vmlinuz-6.12.0

Found initrd image: /boot/initrd.img-6.12.0

Found linux image: /boot/vmlinuz-6.11.2-amd64

Found initrd image: /boot/initrd.img-6.11.2-amd64

Warning: os-prober will be executed to detect other bootable partitions.

Its output will be used to detect bootable binaries on them and create new boot entries.

Adding boot menu entry for UEFI Firmware Settings ...

done

Removing linux-image-6.13.0-rc1-dbg (6.13.0-rc1-00695-gf941996d0cb8-5) ...

...

Removing linux-image-6.13.0-rc3-dbg (6.13.0-rc3-00674-gffe093fb67f8-3) ...

(Reading database ... 758746 files and directories currently installed.)

Purging configuration files for linux-image-6.13.0-rc2 (6.13.0-rc2-00401-g48c84277de4c-2) ...

Removing kernel version 6.13.0-rc2 from systemd-boot...

Purging configuration files for linux-image-6.13.0-rc3 (6.13.0-rc3-00674-gffe093fb67f8-3) ...

Removing kernel version 6.13.0-rc3 from systemd-boot...

Purging configuration files for linux-image-6.13.0-rc1 (6.13.0-rc1-00695-gf941996d0cb8-5) ...

Removing kernel version 6.13.0-rc1 from systemd-boot...

Couldn't have been easier, don't you think? It might take you a little while just to get used to it, but once you do, it's literally all you'll ever need for the most part. It gets things going quickly, and the fact that you can work with the output straight from apt without any one-liners makes it a very attractive choice for system administration. Do note that this section is really only a very basic, rough guide to using apt-patterns correctly. There is so much more for you to discover in the man-pages, which I cannot recommend enough!

Python

Introduction

Love it or hate it, Python can be very powerful for a wide variety of tasks. From simple scripting to full-blown complex programming, Python's got you covered.

While I started off with Python as a first language, I wouldn't recommend it as a first for anyone else. There are key concepts that Python gets to...masquerade from you. However, we're not here to trash-talk Python's shortcomings; that's left as an exercise for the reader at their own discretion and preference. We are here to cover some ground to make Python even more friendlier to the absolute beginner

Assumptions

- This entry assumes some working knowledge with Python. You're not expected to know how to program an entire server application to be able to follow along, but rather be familiar with the basics; variables, functions, and so on

- Python version: 3.12.9 (at the time of writing)

What you need

- A computer, obv; this isn't the 1800s, hey

- Python interpreter

- Text editor

What we'll cover

- The infamous Traceback (most recent call last)

- Getting help()

- Finding and installing Python packages correctly

- Handling externally-managed Python packages with virtual environments

The infamous Traceback (most recent call last)

We've all seen it at some point or another. When first starting out, it can be daunting, and that's understandable; there's some output you might have never learned even existed, and whatever course or tutorial you followed might have thought it would be a great idea to pretend all programs are error-free (spoiler alert: they're not)

Troubleshooting is an essential skill to have, which is why we're starting off strong with this section. We'll begin by learning how to read the traceback output. First things first: the hint is in the output; (most recent call last). What it means is the most recent call is at the bottom of the output, and so we'll be reading it from the bottom upwards. Let's have a look at a nonsensical example with the following code:

#!/usr/bin/env python3

# Filename: tberr.py

from lxml import html

import requests

from requests.exceptions import ConnectionError

def do_request():

try:

resp = requests.get('http://127.1').text

except ConnectionError:

resp = None

return resp

try:

tree = html.fromstring(do_request())

except:

print(type(tree))

Assuming there's no HTTP server running on localhost (yes; loopback address can go by localhost, 127.0.0.1, or 127.1), one can safely assume the requests.get() method is bound to fail, raising a ConnectionError exception. That call was made as part of another function call do_request(). As we can see inside the function body, there's some sort of exception handling, but it doesn't actually handle anything correctly. Neither do the statements that follow the function in the script, but we're doing this for dramatic effect illustration purposes

Next, there's a try/except block that tries to assign the supposedly parsed HTML element (returned from the call to do_request(), which should have returned valid a HTML string, but ended up returning None instead. One can make a very educated guess that html.fromstring() expects a string as an argument, so surely providing None is bound to raise an exception. Here comes the fun part: the except portion of the block should handle such exception, right? Not in this example, I'll tell you what there!

Since html.fromstring() will never come to pass (it received None, remember?), tree will never have been declared in the first place, taking us to the second exception where we try to print the type of tree; a nonexistent Name (i.e. variable in this context). Let's see that in action:

$ chmod +x tberr.py; ./tberr.py

Traceback (most recent call last):

File "/home/$USER/./test.py", line 16, in <module>

tree = html.fromstring(do_request())

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/lxml/html/__init__.py", line 849, in fromstring

is_full_html = _looks_like_full_html_unicode(html)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

TypeError: expected string or bytes-like object, got 'NoneType'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/$USER/./test.py", line 18, in <module>

print(type(tree))

^^^^

NameError: name 'tree' is not defined. Did you mean: 'True'?

One might think that the very bottom of that output is the actual issue, but as you've read, it's only one part of an otherwise dependency of issues. Let's start reading from the bottom upwards:

Traceback (most recent call last):

File "/home/$USER/./test.py", line 18, in <module>

print(type(tree))

^^^^

NameError: name 'tree' is not defined. Did you mean: 'True'?

File "/home/$USER/./test.py", line 18: line 18 in/home/$USER/test.pyis where the exact issue took placeNameError: name 'tree' is not defined. Did you mean: 'True'?:NameErroris the exception, stating that the nametreeis not defined. Python can be helpful sometimes in providing suggestions, but that may not always be as helpful as what meets the eye

Next, we'll move up to the previous/older call:

Traceback (most recent call last):

File "/home/$USER/./test.py", line 16, in <module>

tree = html.fromstring(do_request())

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/lxml/html/__init__.py", line 849, in fromstring

is_full_html = _looks_like_full_html_unicode(html)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

TypeError: expected string or bytes-like object, got 'NoneType'

Here, we have to understand how the calls were invoked. File "/home/$USER/./test.py", line 16 calls html.fromstring() on a NoneType. Internally, html.fromstring() makes another call as follows:

File "/usr/lib/python3/dist-packages/lxml/html/__init__.py", line 849, in fromstring

is_full_html = _looks_like_full_html_unicode(html)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

TypeError: expected string or bytes-like object, got 'NoneType'

As we can see, html.fromstring() expected a string (supposedly HTML), but got NoneType instead

Let's recap, annotating the code block this time:

#!/usr/bin/env python3

# Filename: tberr.py

# The following 3 lines import the required libraries

from lxml import html

import requests

from requests.exceptions import ConnectionError

# Nothing happens here upon executing the script _until_

# there's a call to do_request()

def do_request():

try:

resp = requests.get('http://127.1').text

except ConnectionError:

resp = None

return resp

try:

# Try to assign an HTML element from the string returned by

# do_request(). This will fail because do_request() returns

# NoneType on ConnectionError

tree = html.fromstring(do_request()) # this is where execution jumps up to do_request()

except:

# If the above statement fails, execute the following line(s)

# This will also fail since tree will have never been assigned

print(type(tree))

So, logically, our execution flow is as follows:

tree = html.fromstring()=>do_request()do_request()=>requests.get()=> returnsNoneTypeas per handlingConnectionError, rendering step 1 mootprint(type(tree))=> raisesNameErrorsince step 1 never took place (i.e. the assignment of an HTML element to the variabletree)

While this example makes no sense in terms of logic, or common sense for that matter, it should give you an idea on how to read traceback output

Remember:

- Walk your way up the traceback, going from the most recent call to the oldest

- Pay close attention to file names; know what's relevant in your script, verses what's happening internally inside functions provided by libraries

- Pay close attention to line numbers so you can quickly navigate to the offending lines

- Print-debugging is great and all, but debugging with actual debugging tools/libs is paramount and will help you a great deal

- Don't just skim through traceback output, however long it might be. You need to understand what's happening, what's relevant, and why it happened in the first place. In time, your eyes will be trained to tune out the involved libraries, and you'll be mindful of the data types being passed around

- Last, but not least: READ THE DOCS. Familiarize yourself with exceptions so you know what

TypeError,ValueError,NameError, and what the entire assortment of exception classes is

Getting help()

Now that we've covered reading traceback output, we'll move on to another important skill; getting help

You might have learned the bare minimum, just enough to get you through basic scripting/programming tasks, and that's great, but what do you do when you're looking for help?

Ask {insert forum here}? Spam the same question on however many Discord servers you might be on? (seriously, don't do that; it's royally annoying, and wastes everyone's time)

The answer is simple: refer to the API docs. See, the very developers who make this language possible are the only ones who are capable of providing the most accurate technical documentation thereof. That is the first and foremost source everyone learning whatever {insert language/library/framework/API here} should check

With the rise of LLMs AI, things are unfortunately taking a sad turn, in my humble opinion. Everyone touts AI as some silver bullet to all life's problems and burning questions. It is not, however, any of that; it's something that fakes it just enough to sound like it's an SME. We can argue about how "you don't understand how it works" et al., but really, let's not kid ourselves. When there's a tiny little disclaimer that says any variation of the sentence "information may not be accurate", you know this is your first clue to not trust a word that's being generated out of this tin can's /dev/null (yes, that is exactly what you're thinking about right now)

I digress at this point, and we're not here to debate AI; I might write up an entry on everything I think is wrong with how we're dealing with it, but that's for another time. Let's get back to getting help quickly and effectively

Ways to get help

There are at least 4 different ways to get help:

- Straight from the comfort of your terminal:

- Using

pydoc <keyword> - Inside your interpreter shell:

>>> help(<keyword>) - Inside Vim using

Shift+Kto bring uppydoc <keyword>(i.e. Python-specific documentation)

- Using

- Online: https://docs.python.org/3/

As a last resort, you can always look up keywords like "python multiprocessing docs" (or a variation thereof) using your favorite search engine. In that search, you might stumble upon some reply on some forum which cites the official documentation, or provide a decent technical writeup on how 'keyword' works, or explain its concept. Key is to know how to identify good answers and resources from bad ones, and you can only do that when you've read through enough online resources to tell the difference

Finally, let's say you wanted lookup documentation for a function/method that belongs to an imported library, like requests, for example, but you couldn't be bothered to check the Requests library API online. You can opt to do that interactively inside the interpreter shell as follows:

Python 3.12.9 (main, Feb 5 2025, 01:31:18) [GCC 14.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> help(requests.Session) # help(requests.Session()) also works, but no need for extra chars

At this point, your default $PAGER (ideally LESS) is forked with the documentation pertinent to whichever object you passed to help() as follows: (output is truncated for brevity)

Help on Session in module requests.sessions object:

class Session(SessionRedirectMixin)

| A Requests session.

|

| Provides cookie persistence, connection-pooling, and configuration.

|

| Basic Usage::

|

| >>> import requests

| >>> s = requests.Session()

| >>> s.get('https://httpbin.org/get')

| <Response [200]>

|

| Or as a context manager::

|

| >>> with requests.Session() as s:

| ... s.get('https://httpbin.org/get')

| <Response [200]>

|

| Method resolution order:

| Session

| SessionRedirectMixin

| builtins.object

|

| Methods defined here:

|

| __enter__(self)

|

| __exit__(self, *args)

|

| __getstate__(self)

| Helper for pickle.

|

| __init__(self)

| Initialize self. See help(type(self)) for accurate signature.

| ...

Closing thoughs

Don't be afraid to ask for help, or feel discouraged when you first don't understand something. No one starts off an expert, and no one knows all the ins and outs of a language save for those who invented it to begin with, and possibly a very small subset of people. The more you reference official API documentation, the easier and faster it gets for you, and you'll be positive that you're getting the most recent documentation on the topic at hand from the most verifiable source that can provide it

Finding and installing Python packages correctly

This is an important, and a rather quick one, so pay close attention

For the longest time ever, the de-facto go-to method to install Python packages, as suggested on many an online forum, is pip install <package>. This is no longer the case, however, since PEP-668 (See also: Pip install and Python's externally managed)

PEP-668 TL;DR

Assume the following scenario: your system has the python3-requests package installed system-wide. You then decide to also execute pip install requests, or worse, sudo pip install requests (don't do that or recommend anyone do it; it's why we now have PEP-668 because of how terrible that is. See Exhibit C: The case against /usr/local)

Your system now has two packages with the same name, found in different paths. This is where conflicts exist, especially when you have different versions of the same package installed in different directories. PEP-668 finally puts an end to that, preventing misinformed users from inadvertently damaging their environment. I've already covered that in detail in the linked article above, so we're not going to reinvent the wheel here

How to actually find and install packages correctly

The recommendation is to use your system's package manager. Python packages have the python3- prefix, and so this is with which you should be leading your search